1. Overview

We start with the overall design of PVFS2.

1.1 Key terminology

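- distributions: data distribution, i.e. the mapping between a logical file and the physical files.

- job: a PVFS operation consists of several steps; each step is called a job.

- BMI (Buffered Message Interface): the communication module between client and server.

- flows: data streams, describing how data moves from client to server; this process involves no metadata operations.

- flow interface: the interface used to set up a flow.

- flow protocol: the communication protocol between two endpoints.

- flow endpoint: the source or the destination.

- flow descriptor: the data structure that describes a flow.

- trove: the data storage module.

- vtags: version information for a data stream, used for consistency control.

- instance tag: used to guarantee data consistency.

- gossip: used for logging.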

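1.2 BMI design

The BMI module handles communication between client and server and provides message ordering and flow control. All BMI communication is non-blocking.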
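To make "non-blocking" concrete, here is a minimal sketch of the post-then-test pattern. It is not the BMI API: toy_post_send, toy_test and toy_op are hypothetical names invented for this illustration. Posting returns an operation id immediately, and the caller polls later for completion.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_MAX_OPS 8

/* Hypothetical operation record; not a BMI structure. */
typedef struct {
    int in_use;
    size_t remaining;   /* bytes still to be "sent" */
} toy_op;

static toy_op toy_ops[TOY_MAX_OPS];

/* Post a send and return at once with an operation id, or -1 if the table is full. */
int toy_post_send(size_t nbytes)
{
    for (int id = 0; id < TOY_MAX_OPS; id++) {
        if (!toy_ops[id].in_use) {
            toy_ops[id].in_use = 1;
            toy_ops[id].remaining = nbytes;
            return id;
        }
    }
    return -1;
}

/* Make at most max_progress bytes of progress; return 1 once the op has completed. */
int toy_test(int id, size_t max_progress)
{
    assert(id >= 0 && id < TOY_MAX_OPS && toy_ops[id].in_use);
    toy_op *op = &toy_ops[id];
    size_t step = op->remaining < max_progress ? op->remaining : max_progress;
    op->remaining -= step;
    if (op->remaining == 0) {
        op->in_use = 0;   /* release the slot on completion */
        return 1;
    }
    return 0;
}
```

The caller is free to do other work between toy_post_send and the toy_test calls, which is the point of a non-blocking interface.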

1.3 State machines

Both the client and the server use state machines to control their execution state.

1.4 PVFS request

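typedef struct PINT_Request PVFS_Request; can describe any data layout that an MPI_Datatype can describe.

typedef struct PINT_Request_state PVFS_Request_state; tracks the processing progress of a request.

typedef struct PINT_reqstack PVFS_reqstack; works together with the request state to represent that progress.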

PINT_Request_file_data

PINT_Process_request

Definition of PVFS_Request:

typedef struct PINT_Request {

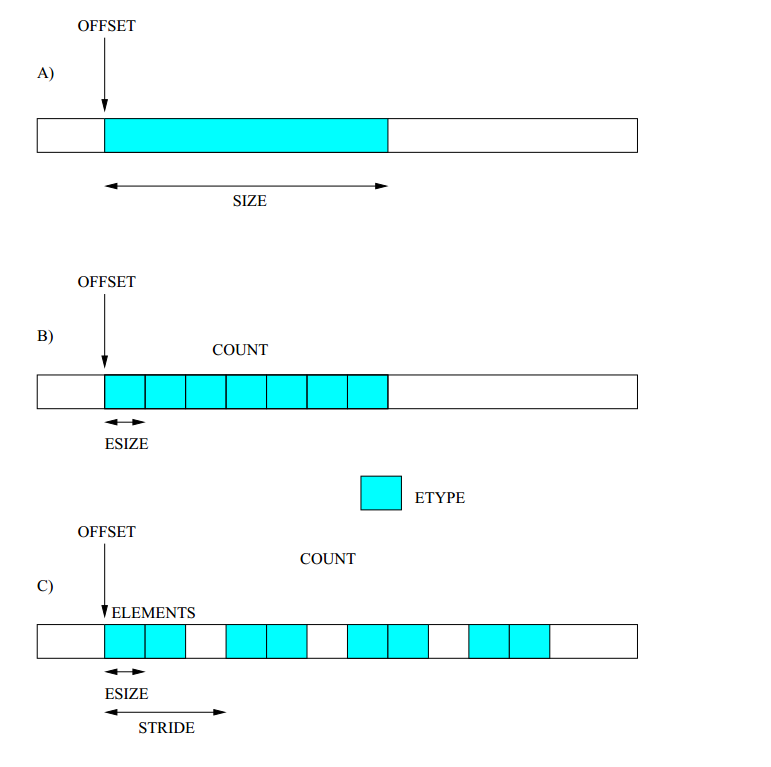

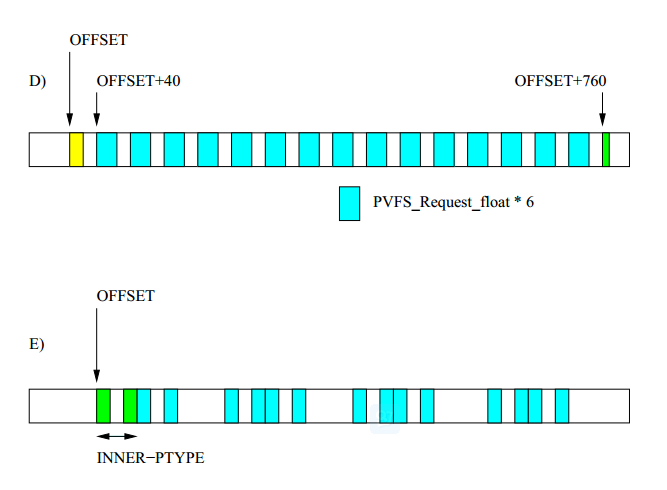

Use the two figures above to understand PINT_Request.

A in the figure above can be expressed as:

PTYPE:

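In other words, the request in A has a single block. A block is a contiguous run of elements of the same type; the block in A is a run of SIZE elements of PVFS_Request_byte, and the size of PVFS_Request_byte is 1.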

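B in the figure above can be expressed as:

PTYPE:

The etype of B is PVFS_Request_int, whose size is 4; for the predefined types in PVFS the etype is null. B also has a single block, which holds COUNT elements of PVFS_Request_int. depth = etype.depth + 1.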

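C in the figure above can be expressed as:

PTYPE:

C has COUNT blocks, and the blocks are not contiguous with one another. The spacing between blocks is STRIDE, which is the distance between the starting offsets of two consecutive blocks. Each block holds ELEMENTS items of ETYPE, each of size ESIZE. ub is the relative offset of the upper bound of the data and lb that of the lower bound; for the first block, lb = 0.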
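The geometry of layout C can be worked out with a few lines of arithmetic. The sketch below is only an illustration: strided_layout and its helper functions are hypothetical and are not the PINT_Request structure. It assumes count >= 1, lb = 0, and an MPI-style upper bound that ends at the last byte of the last block.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical description of layout C; not the PINT_Request struct. */
typedef struct {
    int64_t count;     /* number of blocks (COUNT) */
    int64_t elements;  /* elements per block (ELEMENTS) */
    int64_t esize;     /* size of one element in bytes (ESIZE) */
    int64_t stride;    /* bytes between the starts of consecutive blocks (STRIDE) */
} strided_layout;

/* Starting offset of block i, relative to lb = 0. */
int64_t block_start(const strided_layout *l, int64_t i)
{
    assert(i >= 0 && i < l->count);
    return i * l->stride;
}

/* Bytes of data in one block. */
int64_t block_bytes(const strided_layout *l)
{
    return l->elements * l->esize;
}

/* Upper bound: the end of the last block (assumed convention). */
int64_t upper_bound(const strided_layout *l)
{
    return (l->count - 1) * l->stride + block_bytes(l);
}

/* Total bytes of actual data, excluding the gaps between blocks. */
int64_t data_bytes(const strided_layout *l)
{
    return l->count * block_bytes(l);
}
```

For example, with COUNT = 3, ELEMENTS = 2, ESIZE = 4 and STRIDE = 16, the blocks start at offsets 0, 16 and 32, each block carries 8 bytes, the data totals 24 bytes, and the upper bound is 40.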

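D in the figure above is expressed as:

FIRST-PTYPE:

The request is grouped by block type into three groups, each represented by an stype. Note the parameters ub, lb and aggregate_size: aggregate_size = ub - lb, where ub is always the upper bound of the whole request and lb is the lower bound of the current group.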

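E in the figure above is expressed as:

OUTER-PTYPE:

Here the request is partitioned differently, from the outside in: the outer level is a group of identical blocks, and the inner level is also a group of identical blocks, so depth is 2.

The other parameters are not considered for now.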

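2. MPI-IO ADIO

PVFS2 has three interfaces. One of them is MPI-IO, which is integrated into ROMIO's ADIO module.

ADIO has two kinds of functions: common and file-system specific. common is the collection of shared implementations of all operations, and strided operations usually use the methods in common. Each file system subdirectory defines its own I/O functions in an ADIO_FNS_Operations structure. ADIO_FileSysType_prefix inspects the file name prefix, and ADIO_ResolveFileType decides which ADIO_FNS_Operations structure the ops pointer refers to; for example, a name with the prefix "pvfs2:" points to ADIO_PVFS2_operations. The function pointer table in ADIO_PVFS2_operations:

struct ADIOI_Fns_struct ADIO_PVFS2_operations = {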
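The prefix-based selection of an operations table can be sketched as follows. This is a toy model, not ROMIO code: toy_fns, toy_resolve and the two write functions are hypothetical stand-ins, and a real ADIO table has many more entries.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical, much smaller stand-in for an ADIO operations table. */
typedef struct {
    const char *name;
    int (*write_contig)(const void *buf, size_t len);
} toy_fns;

/* Both toy writers just report how many bytes they were asked to write. */
static int generic_write(const void *buf, size_t len) { (void)buf; return (int)len; }
static int pvfs2_write(const void *buf, size_t len)   { (void)buf; return (int)len; }

static const toy_fns toy_generic_ops = { "generic", generic_write };
static const toy_fns toy_pvfs2_ops   = { "pvfs2",   pvfs2_write };

/* Pick the table from the file name prefix, falling back to the generic one. */
const toy_fns *toy_resolve(const char *filename)
{
    static const char prefix[] = "pvfs2:";
    if (strncmp(filename, prefix, sizeof prefix - 1) == 0)
        return &toy_pvfs2_ops;
    return &toy_generic_ops;
}
```

Callers never name a file system directly; they call through the returned table, e.g. toy_resolve("pvfs2:/mnt/data")->write_contig(buf, len), which is why adding a file system only requires a new table and a new prefix.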

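To write contiguous data, adio-pvfs2 first calls PVFS_Request_contiguous to initialize two PVFS requests (file and memory). The call chain of PVFS_Request_contiguous is as follows.

/*

// Prototype of PVFS_Request_contiguous.

It then calls PVFS_sys_write to perform the write. The call chain of PVFS_sys_write is as follows.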


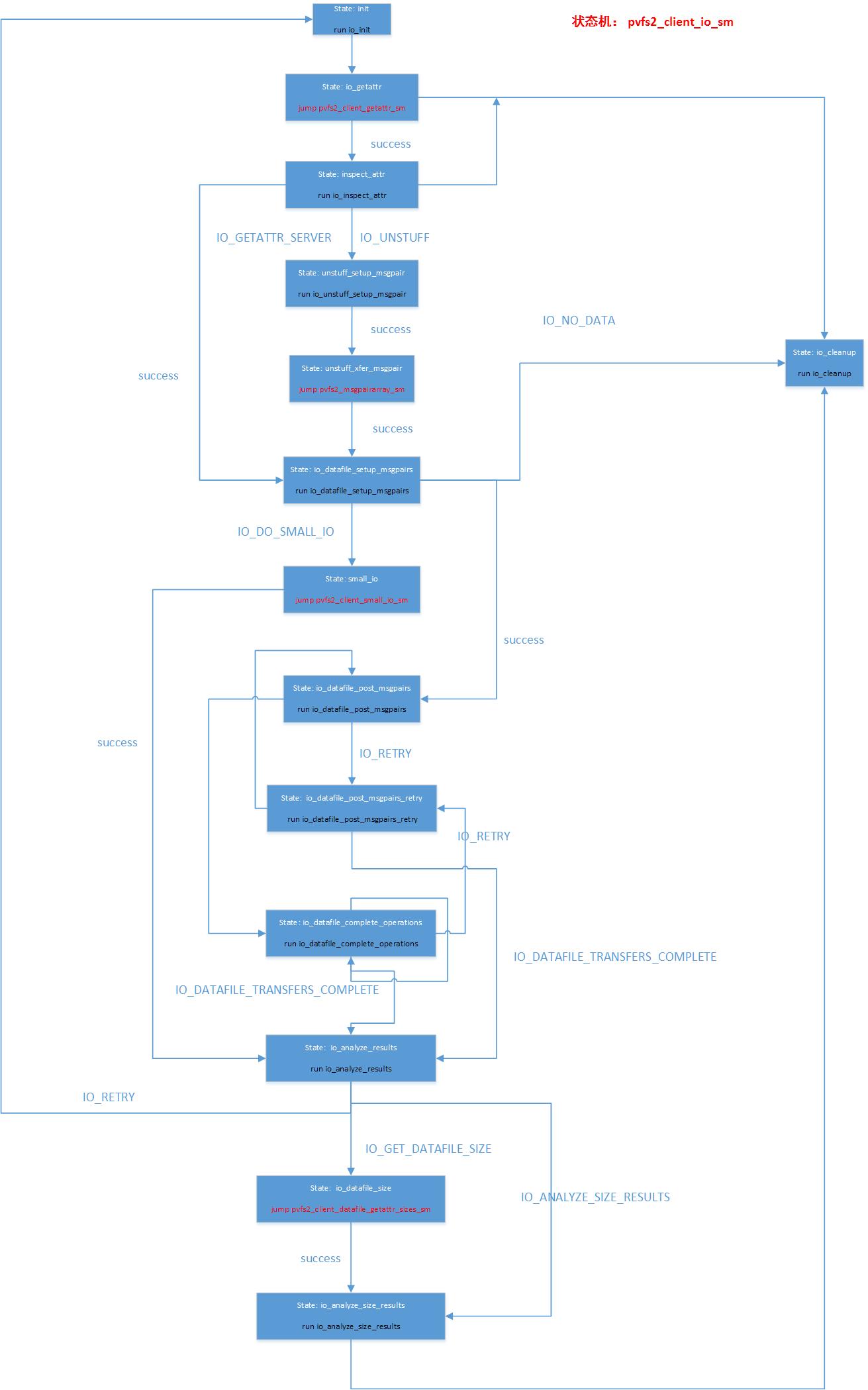

Diagram of the io state machine:

Definition of client-sm:


Definition of the io state machine:


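Definition of the state-machine control block:

/* State machine control block - one per running instance of a state

In PVFS2 every system operation (VFS file operation) corresponds to a state machine. Each running state machine instance has one state machine control block, the smcb. A running state machine has two stacks: a state stack and a frame stack. The frame stack holds the parameters associated with the state machine.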


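PINT_smcb_alloc() allocates an smcb, initializes it, and initializes the frame stack. The function pointer client_op_state_get_machine is passed in and assigned to smcb->op_get_state_machine. PINT_state_machine_locate(*smcb) is then called; it invokes smcb->op_get_state_machine, i.e. client_op_state_get_machine, to find the sm that matches the op, and initializes smcb->current_state. One op corresponds to one sm; an sm is defined in a file with the .sm suffix, and the declarations in the .c file can be read as well. If the initial state of the sm is flagged as a jump, the lookup keeps descending until it reaches the final state machine (one whose initial state is not a JUMP action) and uses that machine's first_state as smcb->current_state.

Back in PINT_client_state_machine_post(), the function actually calls PINT_state_machine_start(), which starts the IO state machine. PINT_state_machine_start calls PINT_state_machine_invoke(), which executes the action.func of current_state, and control thereby enters the logic of the state machine.

When a state starts executing, its first action is to fetch the frame that belongs to the smcb from the frame stack. The frame is modified during execution. If execution jumps into a nested state machine, the frame has to be pushed onto the stack when the state machine exits.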
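The steps above (locate the first real state by following jumps, then run each state's action, with every action first fetching its frame) can be sketched in a small model. Everything here is hypothetical: toy_state, toy_smcb and the toy_* functions only mimic the shape of the mechanism, and returning from a nested machine to its parent is deliberately not modeled.

```c
#include <assert.h>
#include <stddef.h>

struct toy_smcb;
typedef int (*toy_action)(struct toy_smcb *smcb);

/* A state either runs an action or jumps into a nested machine. */
typedef struct toy_state {
    int is_jump;
    const struct toy_state *nested_first;  /* used when is_jump != 0 */
    toy_action func;                       /* used when is_jump == 0 */
    const struct toy_state *next;          /* NULL ends the machine */
} toy_state;

#define TOY_FRAMES 4

/* Hypothetical control block: current state plus a small frame stack. */
typedef struct toy_smcb {
    const toy_state *current_state;
    void *frames[TOY_FRAMES];
    int frame_top;                         /* number of frames on the stack */
} toy_smcb;

void toy_push_frame(toy_smcb *smcb, void *frame)
{
    assert(smcb->frame_top < TOY_FRAMES);
    smcb->frames[smcb->frame_top++] = frame;
}

void *toy_top_frame(toy_smcb *smcb)
{
    assert(smcb->frame_top > 0);
    return smcb->frames[smcb->frame_top - 1];
}

/* Follow jump states until the first state that has a real action. */
const toy_state *toy_locate(const toy_state *first)
{
    while (first != NULL && first->is_jump)
        first = first->nested_first;
    return first;
}

/* Run actions until the machine ends; return the number of states executed. */
int toy_run(toy_smcb *smcb, const toy_state *first)
{
    int executed = 0;
    smcb->current_state = toy_locate(first);
    while (smcb->current_state != NULL) {
        assert(!smcb->current_state->is_jump);  /* only the initial jump is modeled */
        smcb->current_state->func(smcb);
        executed++;
        smcb->current_state = smcb->current_state->next;
    }
    return executed;
}

/* Example action: fetch the frame first, then modify it. */
int toy_count_action(toy_smcb *smcb)
{
    int *counter = (int *)toy_top_frame(smcb);
    (*counter)++;
    return 0;
}
```

Keeping per-operation data in frames rather than in the states themselves lets the same state table be shared by many running instances, each with its own control block.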

According to the description of the io state machine, the most important states are io_datafile_setup_msgpairs, io_datafile_post_msgpairs and io_datafile_complete_operations.

The function executed for io_datafile_setup_msgpairs is io_datafile_setup_msgpairs, which calls io_find_target_datafiles to split the request into sub-requests. The function executed for io_datafile_post_msgpairs is io_datafile_post_msgpairs. The function executed for io_datafile_complete_operations is io_datafile_complete_operations, which calls io_post_flow.

io_post_flow is a very important function. It initializes cur_ctx, which saves the context of the flow, and calls job_flow. job_flow calls PINT_flow_post, which formally starts an I/O request by calling active_flowproto_table[flowproto_id]->flowproto_post(flow_d). That call resolves to fp_multiqueue_post in flow\Flowproto-bmi-trove\Flowproto-multiqueue.c. fp_multiqueue_post calls mem_to_bmi_callback_fn, which calls BMI_post_send_list; BMI_post_send_list calls BMI_tcp_post_send_list, which in turn calls tcp_post_send_generic.

Another function worth noting inside mem_to_bmi_callback_fn is PINT_process_request, which calls PINT_Distribute, presumably to update the sub-requests.

In mem_to_bmi_callback_fn the fp_queue_item data structure records the flow, and q_item->result_chain.result records the actual request.

// Data type of q_item->result_chain.result

// Initialization of cur_ctx

The function pointers related to PVFS2 data distribution:

/* Distribution functions that must be supplied by each dist implementation */
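To illustrate what a distribution has to compute, here is a minimal sketch of a round-robin striping scheme that maps a logical file offset to a server and to an offset inside that server's data file. The names toy_stripe_params, toy_server_of, toy_logical_to_physical and toy_contiguous_length are hypothetical, and the signatures are simplified; they are not the PVFS2 distribution interface.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical parameters of a round-robin striping scheme. */
typedef struct {
    int64_t strip_size;    /* bytes per strip */
    int32_t server_count;  /* number of data files, one per server */
} toy_stripe_params;

/* Which server holds the byte at this logical offset. */
int32_t toy_server_of(const toy_stripe_params *p, int64_t logical)
{
    assert(logical >= 0 && p->strip_size > 0 && p->server_count > 0);
    return (int32_t)((logical / p->strip_size) % p->server_count);
}

/* Offset of that byte inside the owning server's physical data file. */
int64_t toy_logical_to_physical(const toy_stripe_params *p, int64_t logical)
{
    int64_t strip = logical / p->strip_size;        /* global strip index */
    int64_t full_rounds = strip / p->server_count;  /* earlier strips on this server */
    return full_rounds * p->strip_size + logical % p->strip_size;
}

/* Bytes that can be accessed contiguously on the same server from here. */
int64_t toy_contiguous_length(const toy_stripe_params *p, int64_t logical)
{
    return p->strip_size - logical % p->strip_size;
}
```

With a strip size of 64 bytes and 4 servers, logical offset 300 falls in strip 4, which wraps around to server 0; it sits at physical offset 108 in that server's data file, and 20 more bytes can be accessed there before the next strip begins on server 1.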

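PINT_flow_post() is the function that posts a flow; BMI_post_send_list() does the sending.

The server sends an acknowledgement to the client through io_send_ack(). Once the client has received this ack, the server and the client start transferring data.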

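Trove has three modes: null, aio (the default) and directio.

The data structures and function pointers are defined in trove-mgmt.c.

The files that appear to matter most are dbpf-alt-aio.c, dbpf-bstream-aio.c, dbpf-bstream.c, dbpf-context.c, dbpf-collection.c, dbpf-mgmt.c, dbpf-op-queue.c, dbpf-op.c, dbpf-sync.c and dbpf-thread.c.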

dbpf-alt-aio.c initializes two data structures; alt_lio_thread gets called.

static struct dbpf_aio_ops alt_aio_ops =

dbpf-bstream-aio.c contains the function dbpf_bstream_listio_convert, which gets called.

dbpf-bstream.c initializes two data structures.

static struct dbpf_aio_ops aio_ops =

In dbpf-op, dbpf_queued_op_init is called.

In dbpf-sync, dbpf_sync_coalesce_enqueue is called.